The need for security features

When color photocopiers and desktop digital printers became mainstream, high-quality copies became a major threat to value documents and banknotes. Suddenly the barrier to counterfeiting was lowered, and anybody could produce a convincing replica of an ID or a banknote from home.

The industry responded both with anti-copying schemes (often embedded in the hardware) and with increased physical security on the documents themselves: holograms, color-shifting inks and many other features were adopted because a photocopy cannot reproduce their characteristics.

The digital shift

More recently, the shift to digital opened the door to illicit manipulation of digital content, again through means available to the public (think of Photoshop and the accessibility of data on the Web).

The new response was to develop digital tools that recognize patterns of manipulation and forgery (stochastic incoherence, demosaicing irregularities, compression artifacts, etc.), as well as methods that modify the content at the source (or “sign” it) so that any subsequent alteration can be easily detected: digital watermarking and, more recently, cryptographic hashing are the best-known examples.

Digital watermarking involves embedding unique identifiers, either imperceptible or visible, into digital content. These watermarks can serve to verify the origin and authenticity of the content and to detect any unauthorized alterations since the distortion of the carrier signal (the content) is in principle not reversible.
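The embedding principle can be illustrated with a deliberately simple sketch. It assumes a toy least-significant-bit (LSB) scheme applied to a hypothetical list of 8-bit grey values; real watermarking products are typically far more robust, and the function names below are illustrative only.

```python
def embed_watermark(pixels, bits):
    """Write one watermark bit into the least significant bit of each pixel."""
    if len(bits) > len(pixels):
        raise ValueError("watermark is longer than the carrier")
    marked = list(pixels)
    for i, bit in enumerate(bits):
        # Clear the lowest bit, then set it to the watermark bit.
        marked[i] = (marked[i] & ~1) | bit
    return marked


def extract_watermark(pixels, length):
    """Read the watermark back from the least significant bits."""
    return [p & 1 for p in pixels[:length]]


# Hypothetical carrier: eight 8-bit grey values standing in for an image.
carrier = [200, 13, 77, 154, 91, 34, 250, 8]
watermark = [1, 0, 1, 1, 0, 0, 1, 0]

marked = embed_watermark(carrier, watermark)

# Simulated edit: brightening every pixel by one level flips every LSB.
tampered = [min(p + 1, 255) for p in marked]

print(extract_watermark(marked, len(watermark)) == watermark)    # intact copy
print(extract_watermark(tampered, len(watermark)) == watermark)  # edited copy
```

Each pixel changes by at most one grey level, which is why such a mark can be imperceptible, while even a mild edit destroys it and thereby reveals the alteration.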

Cryptographic hashing, on the other hand, generates a digital “fingerprint” or message digest for a piece of digital content, i.e. an identifier that is unique for all practical purposes. If even a single bit of the content is altered, the resulting hash value changes unpredictably, which allows data integrity to be verified. By comparing the original hash value with a newly generated one, it can be confirmed whether the content has been modified during transmission or storage.
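As a minimal sketch of this comparison, the snippet below uses SHA-256 from Python's standard `hashlib` module. The document text and the helper names `fingerprint` and `is_unmodified` are assumptions made for illustration.

```python
import hashlib
import hmac


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 digest of the content as a hex string."""
    return hashlib.sha256(data).hexdigest()


def is_unmodified(data: bytes, reference_digest: str) -> bool:
    """Compare a freshly computed digest with the stored reference."""
    return hmac.compare_digest(fingerprint(data), reference_digest)


# Hypothetical content and the digest recorded when it was issued.
original = b"Certificate no. 12345 issued to Jane Doe"
reference = fingerprint(original)

# Flip a single bit of the first byte to simulate tampering.
altered = bytearray(original)
altered[0] ^= 0x01

print(is_unmodified(original, reference))        # content as issued
print(is_unmodified(bytes(altered), reference))  # content with one bit changed
```

Note that a bare hash only proves integrity relative to a trusted reference digest; it says nothing about who produced the content unless the digest itself is signed or stored somewhere trustworthy.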

A new evil? The rise of AI

Such threats are certainly still present today, but we now face a new, additional paradigm in security: Artificial Intelligence.

We won’t indulge here in discussions about the good and evil of such a technological shift; let’s just agree that AI is here now and it is here to stay.

There are two main aspects of this technology that we believe are impactful for the security industry.

The first aspect is simply the evolution of the threats mentioned above: AI can be leveraged to modify existing content with a level of sophistication that is unprecedented and likely to improve rapidly in the near future. This means that, on the one hand, existing detection solutions must be improved and kept constantly up to date (AI can be leveraged to remove digital watermarks, for instance); on the other hand, new tools must be developed to recognize and detect “signatures” of AI meddling, which differ from those of human manipulation and are possibly specific to the AI model employed.

An example of a content protection approach is the one from C2PA, the Coalition for Content Provenance and Authenticity, promoted by Adobe, Google, Microsoft, Sony and other hardware and software firms (Overview – C2PA), whose goal is to provide a mechanism to verify the origin of digital content. Their solution is based on adding cryptographic metadata to the content. Take, for example, a photograph taken by a smartphone with a C2PA-enabled camera: the picture is linked to a manifest which includes some information as well as a thumbnail; the manifest is then signed cryptographically and embedded into the picture file. C2PA operates along the complete digital editing chain, integrating information that describes what happens to the image at each editing step, such as cropping in Photoshop.
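The manifest-and-signature mechanism can be sketched as follows. This is not the C2PA data model or API: the field names, the `ExampleCam` generator string and the key are hypothetical, and an HMAC with a shared secret stands in for the certificate-based signatures a real implementation would use, simply because it is available in Python's standard library.

```python
import hashlib
import hmac
import json

# Hypothetical signing secret; a real system would use a private key
# tied to a certificate rather than a shared secret.
SIGNING_KEY = b"demo-key-not-for-production"


def sign(claim: dict) -> str:
    """Sign the canonical JSON form of the claim (HMAC as a stand-in)."""
    payload = json.dumps(claim, sort_keys=True).encode()
    return hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()


def make_manifest(asset: bytes, actions: list) -> dict:
    """Bind a list of editing actions to the asset through its hash."""
    claim = {
        "asset_sha256": hashlib.sha256(asset).hexdigest(),
        "generator": "ExampleCam 1.0",  # illustrative value
        "actions": actions,
    }
    return {"claim": claim, "signature": sign(claim)}


def verify(asset: bytes, manifest: dict) -> bool:
    """Check that neither the manifest nor the asset has been altered."""
    signature_ok = hmac.compare_digest(sign(manifest["claim"]), manifest["signature"])
    asset_ok = hashlib.sha256(asset).hexdigest() == manifest["claim"]["asset_sha256"]
    return signature_ok and asset_ok


photo = b"\x89raw-image-bytes-for-illustration"
manifest = make_manifest(photo, ["captured", "cropped"])

# A manifest whose edit history was rewritten without re-signing.
forged = {
    "claim": dict(manifest["claim"], actions=["captured"]),
    "signature": manifest["signature"],
}

print(verify(photo, manifest))                # untouched asset and manifest
print(verify(photo + b"edited", manifest))    # asset changed after signing
print(verify(photo, forged))                  # edit history changed after signing
```

The sketch checks two things separately: that the manifest still carries a valid signature, and that the asset still matches the hash recorded in it.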

That could also work for a digital document such as a digital ID or a birth certificate, or for government-issued media such as a presidential audio or video statement.

The challenges of such an approach are threefold: it requires a very high level of interoperability and standardization to be effective, it is a resource-heavy system, and it will not work on existing content that has not been signed (unless that content is re-processed entirely).

A bigger threat

But the second aspect of AI’s impact is arguably the most disturbing: its generative capabilities. We are all now familiar with the issue of deepfakes, as they spread over social networks and digital media outlets, often as jokes but more and more as misinformation tools with political agendas behind them.

AI can modify existing media but also, after suitable training, generate entirely new content such as a photorealistic image or a credible video, and this simply from a user’s text prompt.

A couple of examples of fraudulent usage of generative AI, the first from the press and the second from my own experience:

Very recently the Italian media were shocked by a fraud at the expense of some well-known Italian VIPs, including politicians and businessmen (La truffa a nome di Crosetto). The scheme was simple: the voice of Defense Minister Guido Crosetto was cloned by an AI and put to use, first to collect the personal mobile numbers of various VIPs, and then to call those VIPs directly and ask them to wire money in order to free some imaginary hostages held prisoner in Iran, Syria and other sensitive countries.

The ransom money was to be wired to foreign accounts, with a guarantee of reimbursement by the Italian government. In at least one case the money was indeed paid, for a sum amounting to millions of euros.

The second example, much less dramatic but equally emblematic, happened to me at a business dinner. After a pleasant meal, one of the guests took out his smartphone and asked his AI agent to generate the restaurant bill for the dinner. He simply entered the name of the restaurant, gave it the date and the number of guests, and in a few seconds a realistic image of a restaurant bill was created, looking like a smartphone snapshot of the real thing and even a little worn, as if it had been kept in a pocket for some time.

When we asked him what he was doing, he said that he wanted to show us that he could now use such a picture to file an expense claim in his corporate ERP and get reimbursed for a dinner he never paid for (there are actually websites already dedicated to receipt generation…).

What do we learn?

As trained AI models are already available to the public and are becoming increasingly advanced, it is not difficult to see here the same kind of disruption that the color photocopier once caused.

“In past years, the training costs of generative models were prohibitively high, and only a few industrial players had the capacity to create truly deceptive models. However, recent developments have changed this landscape: several platforms, such as Civitai (Civitai: The Home of Open-Source Generative AI) and Hugging Face (Hugging Face – The AI community building the future.), now offer low-cost fine-tuning methods accessible to the general public in a SaaS model. Training a model for malicious purposes, such as generating fake restaurant receipts, is now within reach of anyone who can download or share these tools. This raises concerns about a potential epidemic of deceptive models,” says Marc Pic, Innovation Director at Advanced Track & Trace.

Anybody with limited resources can, in principle, generate from home a realistic video of a political leader making any statement or being “caught” in suspicious activities. They can also issue official-looking documents that could then be used to claim rights, to prove ownership or to disprove accusations, especially if such content exists only in the digital world.

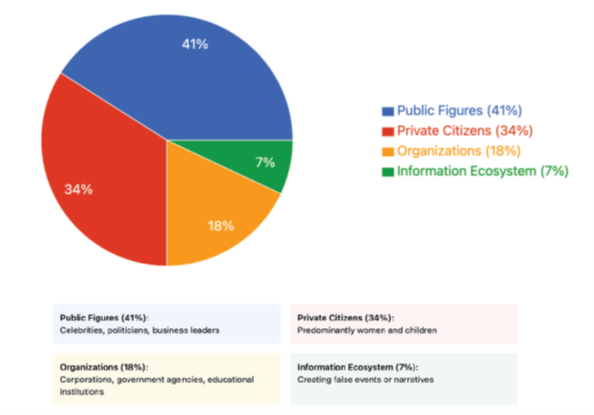

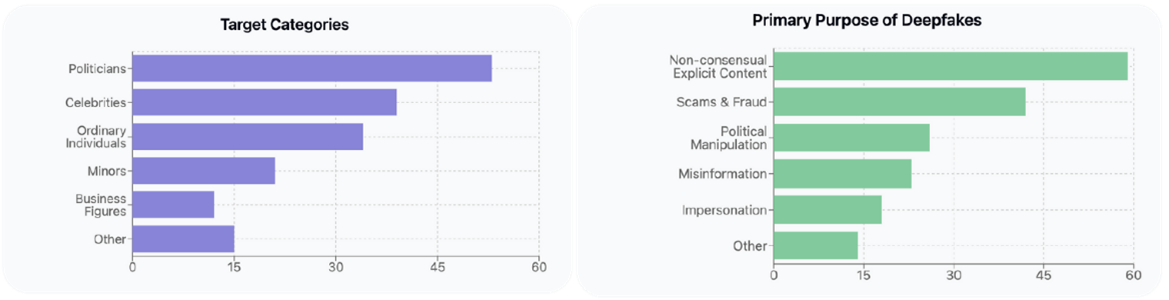

In a recent report¹, financial losses from AI Deepfakes amount to over $200 million in Q1 2025 alone, with the target demographics shown in the graphics below:

The above graphics do not tell the other side of the story: detecting deepfakes is a key objective for both industry and government, and once again it is AI technology that underpins the most effective tools.

The road ahead

At present, several companies and research institutions are actively developing and offering such AI-powered deepfake detection tools. Examples include Resemble.ai (https://www.resemble.ai/); Reality Defender (https://www.realitydefender.com/), which provides a platform for detecting synthetic media across various multimedia formats; Hive AI (https://thehive.ai/), which has developed a Deepfake Detection API capable of identifying AI-generated content in images and videos; Intel’s FakeCatcher (Trusted Media: Real-time FakeCatcher for Deepfake Detection), which analyzes biological signals in videos to determine authenticity; Sensity AI (https://sensity.ai/), a platform for detecting various types of deepfakes with high accuracy; and Facia (https://facia.ai/), which offers AI-powered deepfake detection solutions with a focus on facial analysis.

Interestingly, a scam like the one in my first example is not currently detectable by such systems, for the simple reason that the tools available today require the data to be transmitted over the internet, while fake phone calls go through telecom operators and are therefore not processed by such tools (unless, of course, the operators themselves adopt the technology and apply it to all calls).

The U.S. government, through agencies like the Defense Advanced Research Projects Agency, DARPA (Deepfake Defense Tech Ready for Commercialization, Transition), has been at the forefront of investing in research and development of deepfake detection technologies through programs like Media Forensics and Semantic Forensics (SemaFor: Semantic Forensics | DARPA). Similarly, the UK government has undertaken initiatives like the ACE Deepfake Detection Challenge (Launching the Deepfake Detection Challenge: a collaborative effort against digital deception – Accelerated Capability Environment) to foster collaboration between academia, industry, and government in developing practical detection solutions. Germany has launched a special initiative of the SPRIN-D Agency (in charge of disruptive innovation) to select and challenge 12 technology providers on the detection of deepfakes and related image, video and audio manipulations, identifying the characteristic elements of each generative AI model and their potential applications in various domains. In France, the CyberCampus is in charge of selecting projects through the PEPR Cybersecurity mechanism with a similar goal. Many other governments and industry associations are likely to follow.

Blockchain technology offers another avenue for enhancing the security and trustworthiness of government-issued information. By leveraging a decentralized and immutable digital ledger, blockchain can create tamper-proof records of official government information. Governments are exploring various applications of blockchain technology, including land registries, digital identity management, secure voting systems, supply chain management, and secure communication channels.
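The tamper-evidence property of such a ledger comes from chaining each record to the hash of the previous one. The following sketch shows only that chaining, under loud assumptions: it runs in a single process, has no decentralization or consensus, and its record contents and function names are invented for illustration.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index: int, record: str, prev_hash: str) -> str:
    """Hash the block contents together with the previous block's hash."""
    payload = json.dumps(
        {"index": index, "record": record, "prev_hash": prev_hash},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode()).hexdigest()


def append(chain: list, record: str) -> None:
    """Add a record, linking it to the hash of the last block."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    index = len(chain)
    chain.append({
        "index": index,
        "record": record,
        "prev_hash": prev_hash,
        "hash": block_hash(index, record, prev_hash),
    })


def is_valid(chain: list) -> bool:
    """Recompute every hash and check each link to the previous block."""
    prev_hash = GENESIS_HASH
    for i, block in enumerate(chain):
        if block["index"] != i or block["prev_hash"] != prev_hash:
            return False
        if block["hash"] != block_hash(i, block["record"], prev_hash):
            return False
        prev_hash = block["hash"]
    return True


# Hypothetical land-registry entries.
ledger = []
append(ledger, "Parcel 17 registered to owner A")
append(ledger, "Parcel 17 transferred to owner B")
append(ledger, "Parcel 18 registered to owner C")

print(is_valid(ledger))  # chain as written
```

Changing any earlier record after the fact makes its stored hash, and therefore every later link, fail verification; in a real blockchain the copies held by other participants are what prevent an attacker from simply recomputing the whole chain.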

Let’s use our natural intelligence as well

Overall, we rediscover the same pattern of technological evolution: as new, innovative technologies become publicly available, new fraudulent schemes spread across the globe, while the same technological advances lead industry and governments to adopt new tools and strategies to fight them.

As the AI disruption promises to be one of the biggest in our era, let’s be prepared to invest the proper amount of energy and money to make sure that our next generation of documents (physical and digital) is appropriately protected.

It is likely that the good old strategy of layering various technologies, rather than betting on a single one, will again be the best solution at our disposal. Strong physical security elements on official documents and thorough training of officers and inspectors, coupled with AI-assisted detection tools and possibly AI-assisted design tools, could be the best option for the near future.

AI will likely be both a threat and a powerful ally, but physical security still has its place and may remain our last line of defense for some time to come.

At least equally critical is the protection of any official digital content, which directly affects our opinion and trust as citizens. Here again, technology layering appears to be necessary when it comes to protection against deepfakes. The question, we believe, is not whether but when we will eventually trust AI technology over our own judgement, because manipulation will be all but indiscernible to our human senses. Technology should always be accompanied by an appropriate set of governmental and industry policies, ideally adopted across all borders, and possibly allowing us to fall back on our natural intelligence if technology fails.

¹RESEMBLE.AI report “Q1 2025 Deepfake Incident Report: Mapping Deepfake Incidents.”